Fermionic Green’s function or self-energy

Note

One can continue a self-energy function as long as it does not contain a static Hartree-Fock contribution (i.e. decays to 0 at \(\omega\to\infty\)). In this case norms must be precomputed separately as first spectral moments of the self-energy.

For derivation of spectral moments see, for instance,

"Interpolating self-energy of the infinite-dimensional Hubbard model:

Modifying the iterative perturbation theory"

M. Potthoff, T. Wegner, and W. Nolting, Phys. Rev. B 55, 16132 (1997)

Perform analytic continuation using the FermionGf kernel

# Import HDFArchive and some TRIQS modules

from h5 import HDFArchive

from triqs.gf import *

import triqs.utility.mpi as mpi

# Import main SOM class and utility functions

from som import Som, fill_refreq, compute_tail, reconstruct

n_tau = 500 # Number of \tau-slices for the input GF

n_w = 801 # Number of energy slices for G(\omega)

energy_window = (-4.0, 4.0) # Energy window to search the solution in

tail_max_order = 10 # Maximum tail expansion order to be computed

# Parameters for Som.accumulate()

acc_params = {'energy_window': energy_window}

# Verbosity level

acc_params['verbosity'] = 2

# Number of particular solutions to accumulate

acc_params['l'] = 1000

# Number of global updates

acc_params['f'] = 100

# Number of local updates per global update

acc_params['t'] = 50

# Accumulate histogram of \chi

acc_params['make_histograms'] = True

# Read G(\tau) from an archive.

# Could be G(i\omega_n) or G_l as well.

g_tau = HDFArchive('input.h5', 'r')['g_tau']

# g_tau stored in input.h5 has a dense mesh with 10001 slices

# Prepare input data: Reduce the number of \tau-slices from 10001 to n_tau

g_input = rebinning_tau(g_tau, n_tau)

# Set the error bars to a constant (all points of g_tau are equally important)

error_bars = g_input.copy()

error_bars.data[:] = 0.01

# Construct a SOM object

#

# Expected norms of spectral functions can be passed to the constructor as

# norms = [norm_1, norm_2, ..., norm_M], where M is the target dimension of

# g_input (only diagonal elements will be continued). All norms are set to 1.0

# by default.

cont = Som(g_input, error_bars, kind="FermionGf", norms=[1.0, 1.0])

# Accumulate particular solutions. This may take some time ...

cont.accumulate(**acc_params)

# Construct the final solution as a sum of good particular solutions with

# equal weights. Good particular solutions are those with \chi <= good_d_abs

# and \chi/\chi_{min} <= good_d_rel.

good_chi_abs = 0.06

good_chi_rel = 2.0

cont.compute_final_solution(good_chi_abs=good_chi_abs,

good_chi_rel=good_chi_rel,

verbosity=1)

# Recover G(\omega) on an energy mesh.

# NB: we can use *any* energy window at this point, not necessarily that

# from 'acc_params'.

g_w = GfReFreq(window=(-5.0, 5.0),

n_points=n_w,

indices=g_tau.indices)

fill_refreq(g_w, cont)

# Do the same, but this time without binning.

g_w_wo_binning = GfReFreq(window=(-5.0, 5.0),

n_points=n_w,

indices=g_tau.indices)

fill_refreq(g_w_wo_binning, cont, with_binning=False)

# Compute tail coefficients of G(\omega)

tail = compute_tail(tail_max_order, cont)

# G(\tau) reconstructed from the solution

g_rec_tau = g_input.copy()

reconstruct(g_rec_tau, cont)

# On master node, save parameters and results to an archive

if mpi.is_master_node():

with HDFArchive("results.h5", 'w') as ar:

ar['acc_params'] = acc_params

ar['good_chi_abs'] = good_chi_abs

ar['good_chi_rel'] = good_chi_rel

ar['g_tau'] = g_tau

ar['g_rec_tau'] = g_rec_tau

ar['g_w'] = g_w

ar['g_w_wo_binning'] = g_w_wo_binning

ar['tail'] = tail

ar['histograms'] = cont.histograms

Download input file input.h5.

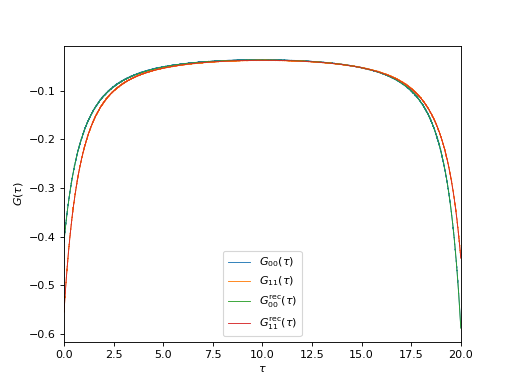

Plot input and reconstructed imaginary-time GF’s

from h5 import HDFArchive

from triqs.gf import *

from matplotlib import pyplot as plt

from triqs.plot.mpl_interface import oplot

# Read data from archive

ar = HDFArchive('results.h5', 'r')

g_tau = ar['g_tau']

g_rec_tau = ar['g_rec_tau']

# Plot input and reconstructed G(\tau)

oplot(g_tau[0, 0], mode='R', lw=0.8, label=r"$G_{00}(\tau)$")

oplot(g_tau[1, 1], mode='R', lw=0.8, label=r"$G_{11}(\tau)$")

oplot(g_rec_tau[0, 0], mode='R', lw=0.8, label=r"$G_{00}^\mathrm{rec}(\tau)$")

oplot(g_rec_tau[1, 1], mode='R', lw=0.8, label=r"$G_{11}^\mathrm{rec}(\tau)$")

plt.xlim((0, g_tau.mesh.beta))

plt.ylabel(r"$G(\tau)$")

plt.legend(loc="lower center")

(Source code, png, hires.png, pdf)

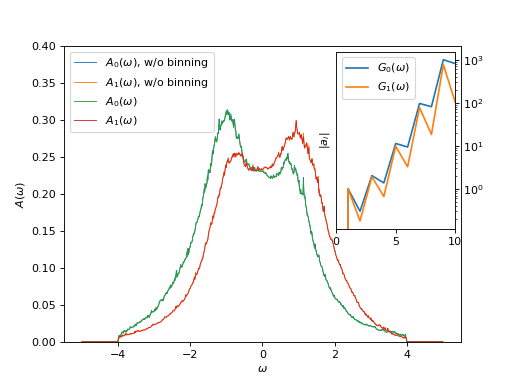

Plot spectral functions and tail coefficients

from h5 import HDFArchive

from triqs.gf import *

from matplotlib import pyplot as plt

from mpl_toolkits.axes_grid1.inset_locator import inset_axes

from triqs.plot.mpl_interface import oplot

import numpy

# Read data from archive

ar = HDFArchive('results.h5', 'r')

g_w = ar['g_w']

g_w_wob = ar['g_w_wo_binning']

tail = ar['tail']

# Plot spectral functions with and without binning

oplot(g_w_wob[0, 0], mode='S', lw=0.8, label=r"$A_0(\omega)$, w/o binning")

oplot(g_w_wob[1, 1], mode='S', lw=0.8, label=r"$A_1(\omega)$, w/o binning")

oplot(g_w[0, 0], mode='S', linewidth=0.8, label=r"$A_0(\omega)$")

oplot(g_w[1, 1], mode='S', linewidth=0.8, label=r"$A_1(\omega)$")

plt.ylim((0, 0.4))

plt.ylabel(r"$A(\omega)$")

plt.legend(loc="upper left")

# Plot tail coefficients

tail_orders = numpy.array(range(tail.shape[0]))

tail_ax = inset_axes(plt.gca(), width="30%", height="60%", loc="upper right")

tail_ax.semilogy(tail_orders, numpy.abs(tail[:, 0, 0]), label=r"$G_0(\omega)$")

tail_ax.semilogy(tail_orders, numpy.abs(tail[:, 1, 1]), label=r"$G_1(\omega)$")

tail_ax.set_xlim((tail_orders[0], tail_orders[-1]))

tail_ax.set_ylabel("$|a_i|$")

tail_ax.yaxis.tick_right()

tail_ax.legend()

(Source code, png, hires.png, pdf)

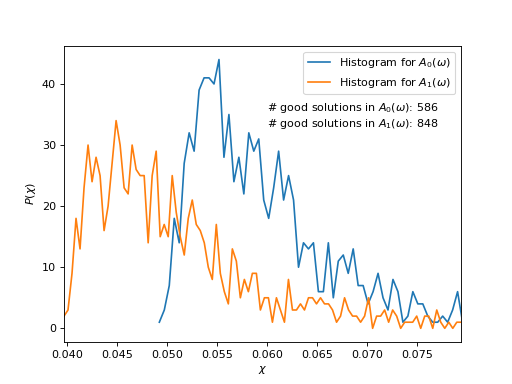

Plot \(\chi\)-histograms

from h5 import HDFArchive

from triqs.stat import Histogram

from matplotlib import pyplot as plt

from som import count_good_solutions

import numpy

# Read data from archive

ar = HDFArchive('results.h5', 'r')

hists = ar['histograms']

good_chi_abs = ar['good_chi_abs']

good_chi_rel = ar['good_chi_rel']

# Plot histograms

chi_min, chi_max = numpy.inf, 0

for n, hist in enumerate(hists):

chi_grid = numpy.array([hist.mesh_point(n) for n in range(len(hist))])

chi_min = min(chi_min, chi_grid[0])

chi_max = max(chi_min, chi_grid[-1])

plt.plot(chi_grid, hist.data, label=r"Histogram for $A_%d(\omega)$" % n)

plt.xlim((chi_min, chi_max))

plt.xlabel(r"$\chi$")

plt.ylabel(r"$P(\chi)$")

plt.legend(loc="upper right")

# Count good solutions using saved histograms

n_good_sol = [count_good_solutions(h,

good_chi_abs=good_chi_abs,

good_chi_rel=good_chi_rel)

for h in hists]

plt.text(0.06, 33,

("# good solutions in $A_0(\\omega)$: %d\n"

"# good solutions in $A_1(\\omega)$: %d") %

(n_good_sol[0], n_good_sol[1]))

(Source code, png, hires.png, pdf)